A short framing article about energy use and AI, just released

On February 10th, Veolia and Microsoft released a report on AI and energy, water, and waste management. One of the short chapters in that report is a framing article about AI and energy use by me and Eric Masanet at UC Santa Barbara.

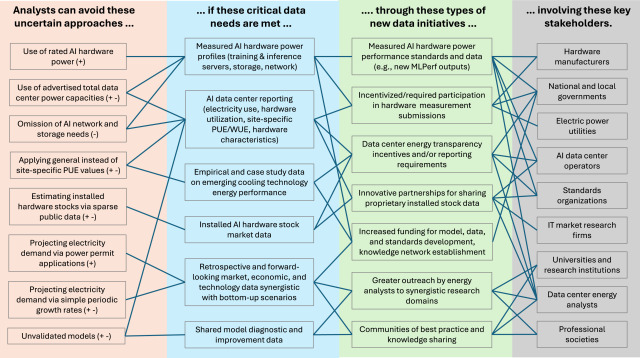

The article distinguishes three ways AI might affect energy use:

• Direct effects of AI operations

• Effects of applying AI to energy-related activities

• Interactive systemic effects of AI on the broader economy

Figure 1 from the article illustrates these three potential effects and the uncertainties affecting each of them.

Here's the abstract:

Many recent assessments of the effects of artificial intelligence (AI) systems lack rigor. The electricity use and emissions of AI operations are often viewed as the most salient issues, but use of AI systems can have important effects when they are deployed, and such deployments can lead to complicated systemic interactions between AI systems, the broader energy system, and the economy as a whole.

All effects of AI deployment are subject to deep uncertainty, but analyzing the effects of AI operations is usually the most feasible. Human understanding of the effects of AI deployments on specific domains and on interactions with the broader economy is in its infancy, but we know that these effects could either increase societal energy use (e.g., by making fossil fuel or geothermal extraction cheaper, or fueling increased consumer consumption by more targeted advertising) or decrease societal energy use (e.g., by enabling deployment of batteries to increase renewable energy adoption, which is more efficient than thermal plants on a primary energy basis, or improving efficiency throughout the broader economy). It is impossible to know in advance the sign of the net effect over the long term.

For these less well understood effects, researchers should design consistent test cases, focusing on measuring economic, energetic, and environmental parameters before and after the deployment of new AI systems. For testing interactions, new kinds of large-scale economic models may be needed, as current models do not represent the effects of technology changes in a sufficiently detailed and systematic way.

The full reference is:

Koomey, Jonathan, and Eric Masanet. 2026. Understanding AI energy use (part of a special report on AI for energy, water and waste management). Veolia and Microsoft. February 10. [https://www.institut.veolia.org/en/publications/veolia-institute-review-facts-reports/ai-energy-water-and-waste-management]

Addendum (February 13, 2026)

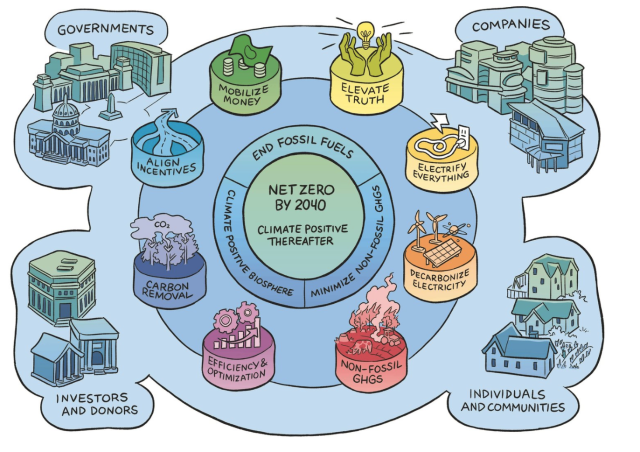

The three ways AI affects energy use mirror the structure used in this excellent article:

Kaack, Lynn H., Priya L. Donti, Emma Strubell, George Kamiya, Felix Creutzig, and David Rolnick. 2022. "Aligning artificial intelligence with climate change mitigation." Nature Climate Change. vol. 12, no. 6. 2022/06/01. pp. 518-527. [https://doi.org/10.1038/s41558-022-01377-7]

In much earlier drafts (a few years ago) we cited this article, and we should have cited it in the final version, but that reference somehow got dropped after many iterations. Apologies to those authors; we will make sure to include that reference in any follow-on work.